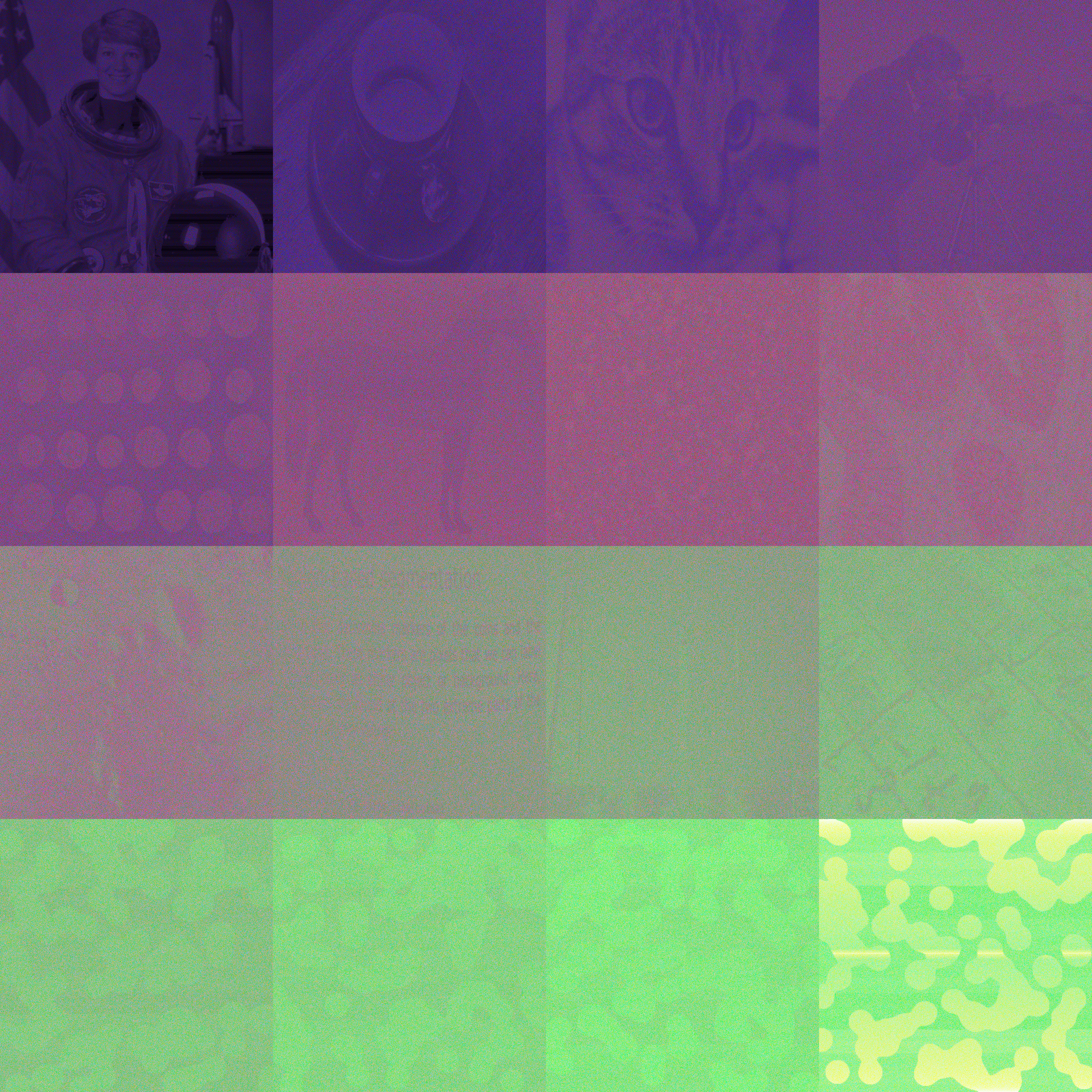

This image might not be as sophisticated as other photographic entries like Cat (2024), Wedding Table (2013), or even VibrantMustard (2009), but I was quite surprised by a) how quickly ChatGPT o4 was able to come up with working Python code, and b) how fast Google Colab was able to execute said code. I basically asked one question and pasted the answer into another browser tab, the whole ordeal taking mere minutes. (This content is not sponsored.)

For what it's worth, this image arguably does look better than M.I, which, as far as I can tell, was the first image ever to contain all 24-bit RGB colors exactly once while representing something that could be considered photographic.

```python
# Colab shell command; outside a notebook, run "pip install scikit-image" instead
!pip install -q scikit-image

import numpy as np
from PIL import Image
from skimage import data, color
from skimage.util import img_as_float, img_as_ubyte
from skimage.transform import resize
import itertools


# Load 16 scikit-image sample images (grayscale ones expanded to RGB)
# and resize each one to a 1024 x 1024 tile.
def get_standard_images():
    def generate_blob(seed):
        # Note: recent scikit-image releases give binary_blobs its own random
        # generator, so np.random.seed may not make these blobs reproducible.
        np.random.seed(seed)
        blob = data.binary_blobs(length=512, volume_fraction=0.5)
        return color.gray2rgb(blob.astype(float))

    images = [
        data.astronaut(),
        data.coffee(),
        data.chelsea(),
        color.gray2rgb(data.camera()),
        color.gray2rgb(data.coins()),
        color.gray2rgb(data.horse()),
        data.hubble_deep_field(),
        data.immunohistochemistry(),
        color.gray2rgb(data.moon()),
        color.gray2rgb(data.page()),
        data.rocket(),
        color.gray2rgb(data.text()),
        generate_blob(1),
        generate_blob(2),
        generate_blob(3),
        generate_blob(4),
    ]
    resized = []
    for img in images:
        img = img_as_float(img)
        resized_img = resize(img, (1024, 1024), anti_aliasing=True)
        resized.append(img_as_ubyte(resized_img))
    return resized


# Enumerate all 256^3 colors and sort them by CIELAB L*, then a*, then b*
# (np.lexsort treats the last key as the primary one).
def generate_sorted_rgb():
    rgb = np.array(list(itertools.product(range(256), repeat=3)), dtype=np.uint8)
    lab = color.rgb2lab(rgb.reshape(-1, 1, 3)).reshape(-1, 3)
    sorted_indices = np.lexsort((lab[:, 2], lab[:, 1], lab[:, 0]))
    return rgb[sorted_indices]


# Fill a 4 x 4 grid of 1024 x 1024 tiles; each tile takes the next 1024^2
# colors from the sorted list, so every color is used exactly once.
def map_colors_to_images(images, sorted_rgb):
    final_image = np.zeros((4096, 4096, 3), dtype=np.uint8)
    idx = 0
    for i in range(4):
        for j in range(4):
            block = images[i * 4 + j]
            block_lab = color.rgb2lab(block)
            lightness = block_lab[:, :, 0].flatten()
            color_chunk = sorted_rgb[idx:idx + 1024 * 1024]
            idx += 1024 * 1024
            chunk_lab = color.rgb2lab(color_chunk.reshape(-1, 1, 3)).reshape(-1, 3)
            sorted_chunk = color_chunk[np.argsort(chunk_lab[:, 0])]
            # The pixel with the n-th lowest lightness gets the n-th darkest color
            mapped_block = sorted_chunk[np.argsort(np.argsort(lightness))].reshape(1024, 1024, 3)
            final_image[i*1024:(i+1)*1024, j*1024:(j+1)*1024] = mapped_block
    return final_image


images = get_standard_images()
sorted_rgb = generate_sorted_rgb()
final_image = map_colors_to_images(images, sorted_rgb)
img = Image.fromarray(final_image)
img.save("allrgb_test_grid_4096x4096.png")
img.show()

unique_colors = len(np.unique(final_image.reshape(-1, 3), axis=0))
print("Unique RGB colors:", unique_colors)  # should be 16,777,216
```
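The core trick in `map_colors_to_images` is rank matching: sort the palette by lightness, hand each tile the next contiguous slice, and give the pixel with the n-th lowest lightness the n-th darkest color of that slice. The sketch below reproduces that idea at a scale small enough to inspect. It is not the script above; it assumes a reduced cube with 4 bits per channel (16³ = 4,096 colors, so a 64 × 64 image made of 16 tiles of 16 × 16), random noise instead of the scikit-image sample photos, and Rec. 601 luma as a NumPy-only stand-in for CIELAB L*. The helpers `rgb_cube`, `luma`, and `map_by_rank` are hypothetical names chosen for illustration.

```python
import math

import numpy as np


def rgb_cube(bits: int) -> np.ndarray:
    """Every color of a reduced RGB cube with `bits` bits per channel, as an (N, 3) uint8 array."""
    levels = np.linspace(0, 255, 2 ** bits).round().astype(np.uint8)
    r, g, b = np.meshgrid(levels, levels, levels, indexing="ij")
    return np.stack([r.ravel(), g.ravel(), b.ravel()], axis=1)


def luma(rgb: np.ndarray) -> np.ndarray:
    """Rec. 601 luma, a cheap stand-in (assumption) for the CIELAB L* used in the real script."""
    rgb = rgb.astype(np.float64)
    return 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]


def map_by_rank(tiles: list[np.ndarray], colors: np.ndarray, tile_side: int) -> np.ndarray:
    """Place each color exactly once, matching pixel lightness ranks to color lightness ranks."""
    grid = math.isqrt(len(tiles))  # tiles are laid out as a grid x grid square
    per_tile = tile_side * tile_side
    assert grid * grid == len(tiles) and per_tile * len(tiles) == len(colors)

    # Sort the whole palette once, darkest first; contiguous slices stay sorted.
    ordered = colors[np.argsort(luma(colors), kind="stable")]
    out = np.zeros((grid * tile_side, grid * tile_side, 3), dtype=colors.dtype)

    for k, tile in enumerate(tiles):
        chunk = ordered[k * per_tile:(k + 1) * per_tile]  # k-th darkest slice
        # argsort(argsort(x)) turns values into ranks: 0 for the darkest pixel, and so on.
        ranks = np.argsort(np.argsort(tile.ravel(), kind="stable"), kind="stable")
        i, j = divmod(k, grid)
        out[i * tile_side:(i + 1) * tile_side, j * tile_side:(j + 1) * tile_side] = (
            chunk[ranks].reshape(tile_side, tile_side, 3)
        )
    return out


# 4 bits per channel: 16**3 = 4,096 colors = a 64 x 64 image of 16 tiles of 16 x 16.
rng = np.random.default_rng(0)
tiles = [rng.random((16, 16)) for _ in range(16)]  # placeholder "photos" (lightness only)
colors = rgb_cube(4)
img = map_by_rank(tiles, colors, tile_side=16)

n_unique = len(np.unique(img.reshape(-1, 3), axis=0))
print(img.shape, n_unique)  # (64, 64, 3) 4096
```

Because each tile receives its own contiguous slice of the sorted palette and the ranks form a permutation, every color lands exactly once; the same argument is why the full-size script's unique-color check is expected to report 16,777,216. Since the global sort already orders each slice by lightness, the original script's extra per-chunk re-sort by L* appears to change only the order of ties.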

I did not need to write any code myself or even look anything up for this one. I also purposely did not try to optimize or otherwise improve on the result, because I want this to be the benchmark against which we can see how far this approach will eventually take us, which will undoubtedly be quite far.

| Date | |
|---|---|
| Colors | 16,777,216 |
| Pixels | 16,777,216 |
| Dimensions | 4,096 × 4,096 |
| Bytes | 49,734,627 |